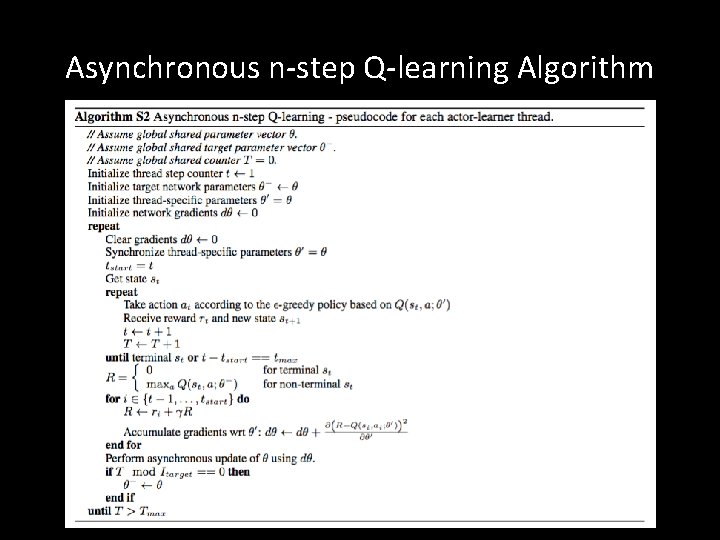

Are the final states not being updated in this $n$-step Q-Learning algorithm? - Artificial Intelligence Stack Exchange

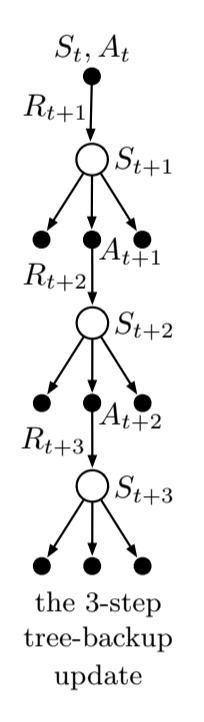

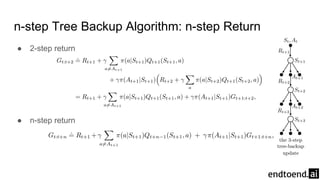

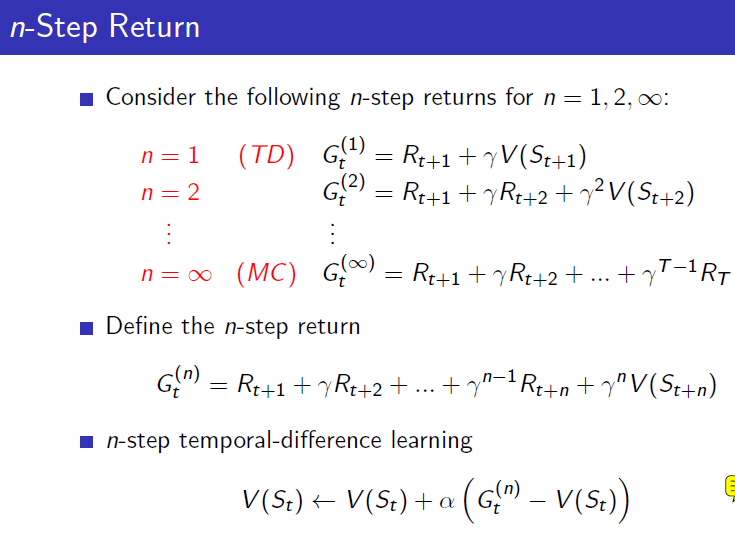

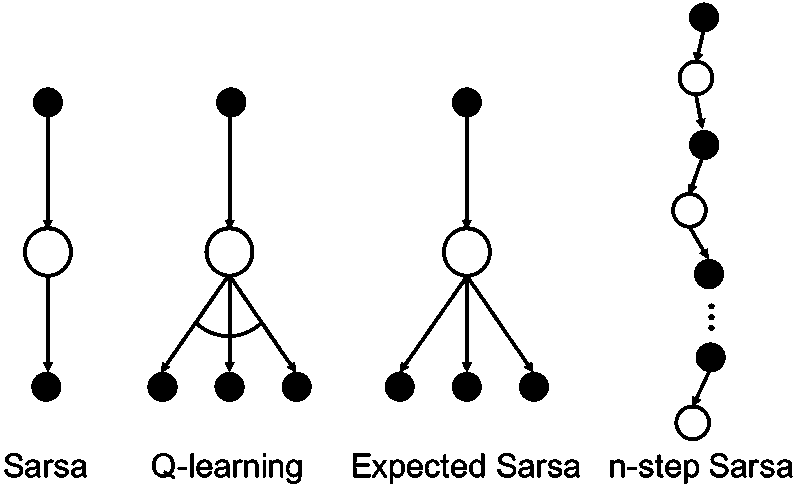

Reinforcement learning: understanding this derivation of n-step Tree Backup algorithm - Data Science Stack Exchange

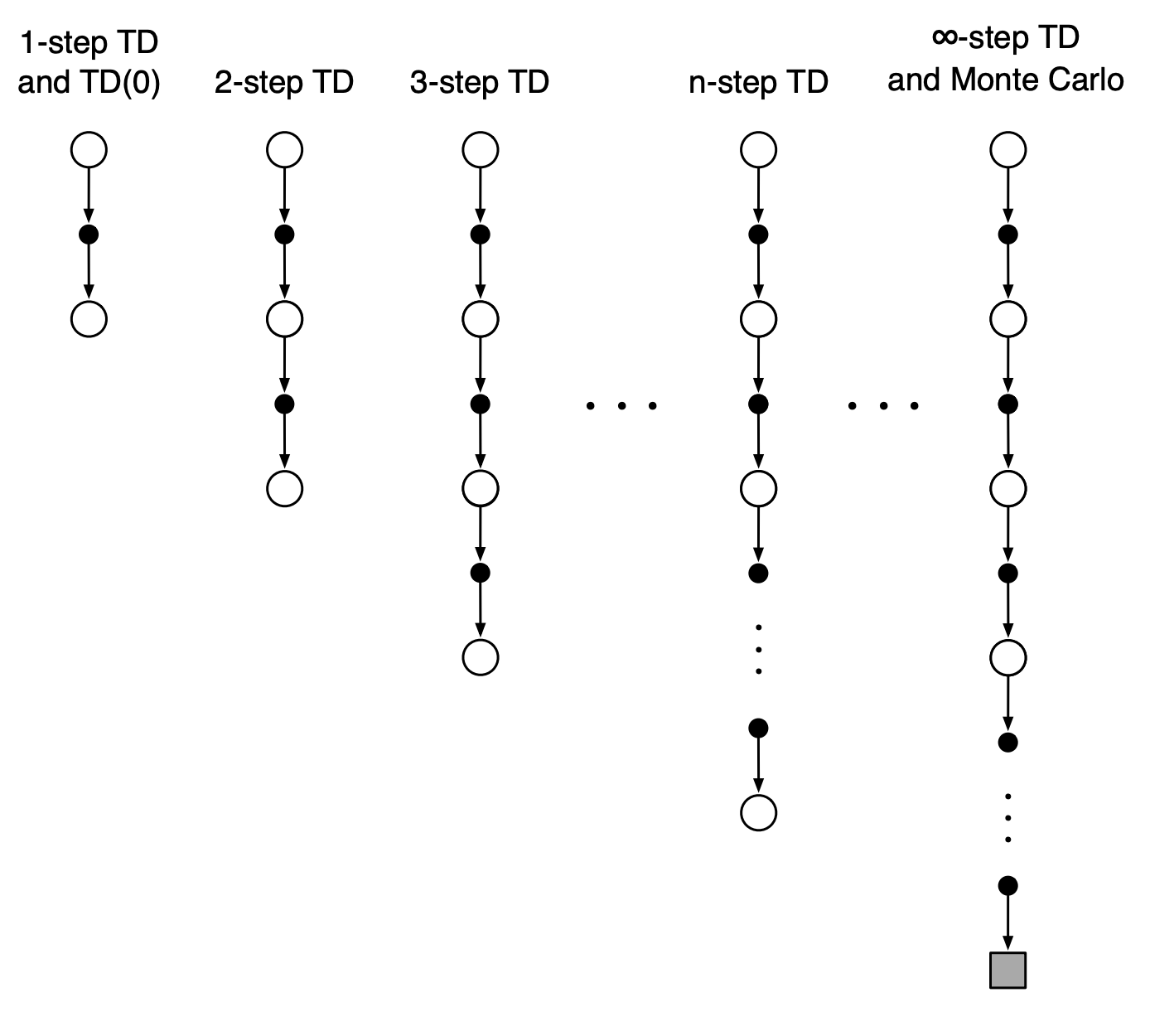

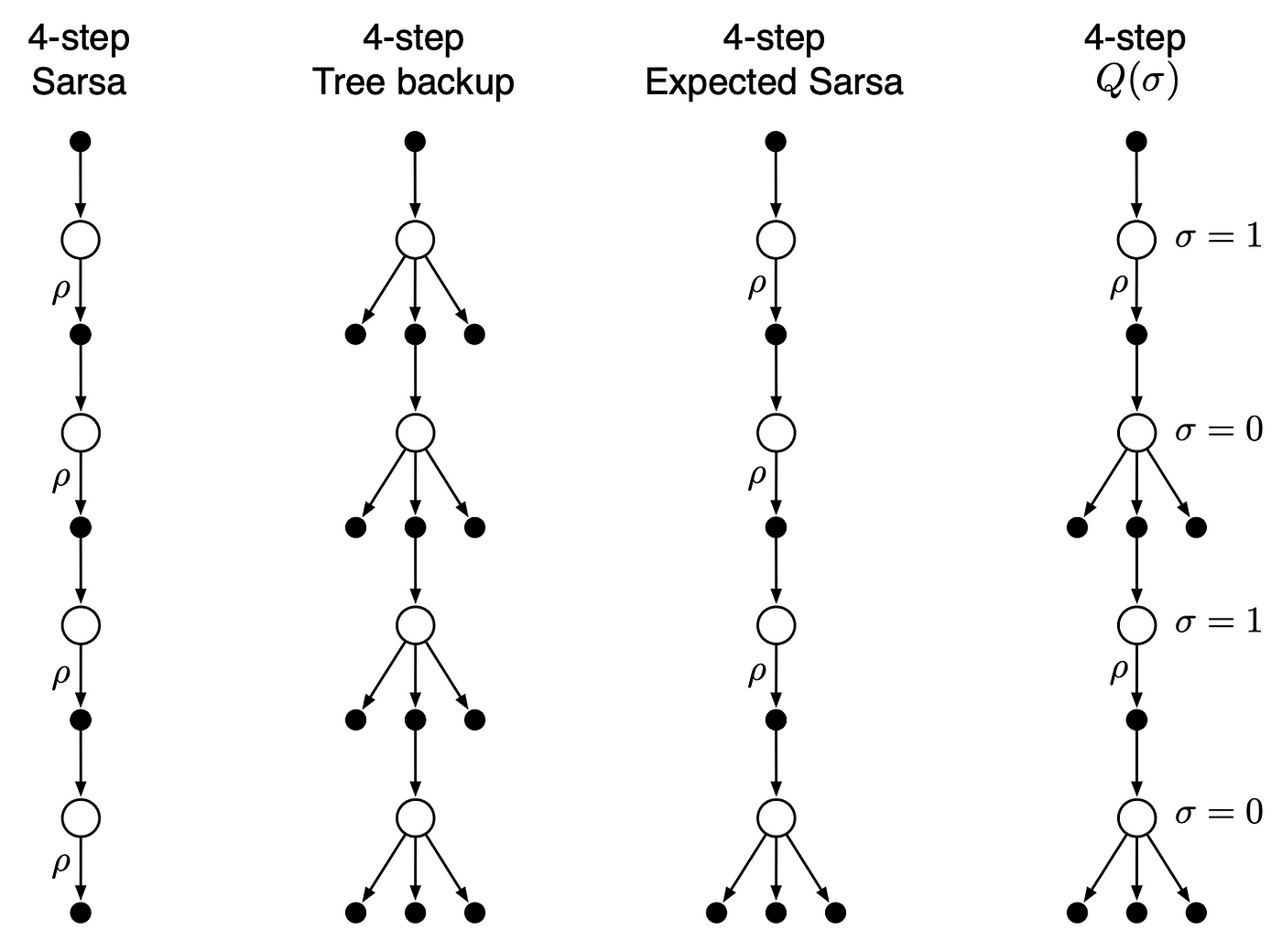

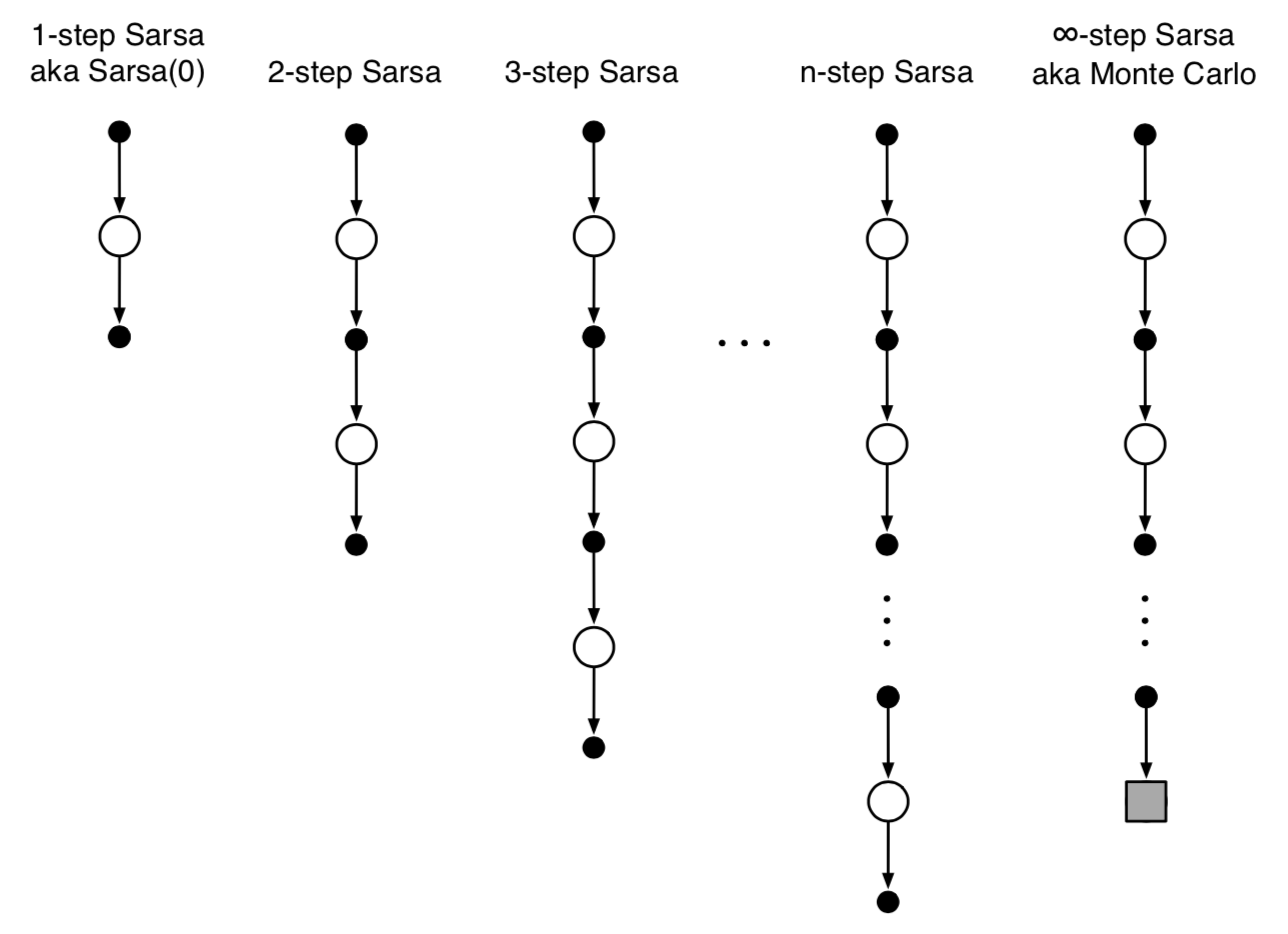

N-step TD Method. The unification of SARSA and Monte… | by Jeremy Zhang | Zero Equals False | Medium